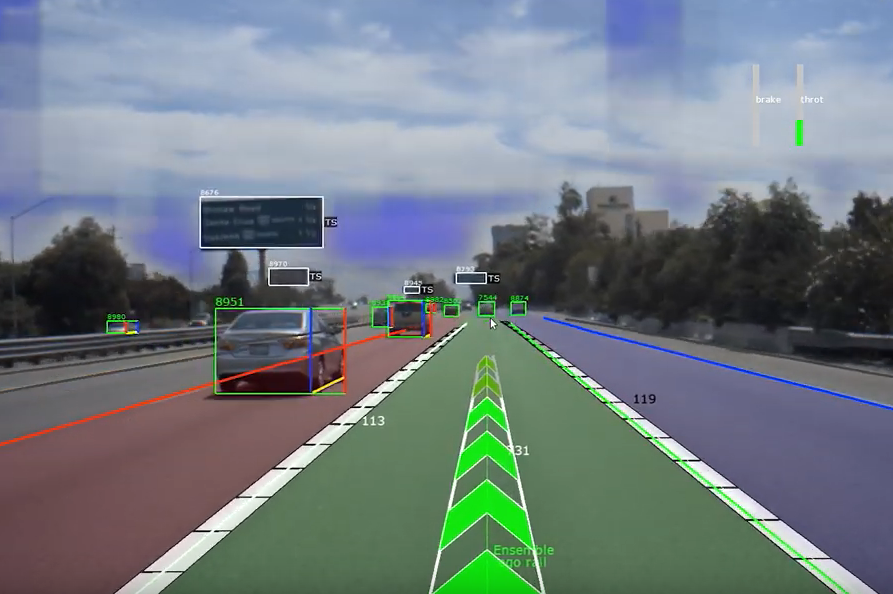

Deep learning research in autonomous driving at NVIDIA

Applying state-of-the-art deep learning research and advanced computing hardware to create the next generation of autonomous vehicles. Specific projects include: 1) developing models for dynamic/static object detection, e.g. vehicles, obstacles, road boundaries, road markings, signs/lights, etc.; 2) predicting the future driving trajectory based on camera perception (the latest model has achieved nearly 1 million meters between disengagements on highway); 3) building and maintaining the AI infrastructure for research and development engineering; 4) learning the uncertainty of path prediction by estimating the distribution of drivable trajectories from human-driving data; 5) active learning for sampling diverse and effective data points to improve models over time; 6) developing a novel multi-resolution image patch for the model's long-range perception; 7) R&D on sensor fusion models and algorithms for camera, radar and lidar; 8) building a distributed training pipeline and dataloader infrastructure in PyTorch; 9) developing a world model and learning driving policy from human-driving data (collaboration with Dr. Yann LeCun and Dr. Alfredo Canziani); 10) estimating the amount of data required for training models to achieve a certain failure rate (collaboration with Dr. Anna Choromanska's group). [Video list]

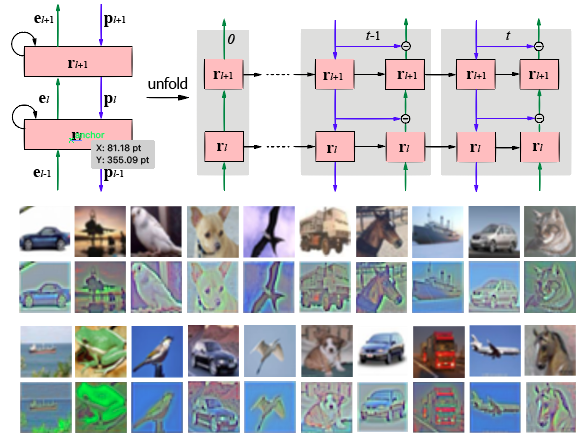

Designing brain-inspired deep neural networks based on neuroscience theory

Inspired by predictive coding in neuroscience, we designed bi-directional and recurrent neural nets, namely deep predictive coding networks (PCN). PCN uses convolutional layers in both feedforward and feedback networks, and recurrent connections within each layer. Feedback connections from a higher layer carry the prediction of its lower-layer representation; feedforward connections carry the prediction errors to the higher layer. Given image input, PCN runs recursive cycles of bottom-up and top-down computation to update its internal representations, reducing the difference between bottom-up input and top-down prediction at every layer. After multiple cycles of recursive updating, the representation is used for image classification. We implemented the recurrent process in both global and local modes. In training, the classification error backpropagates across layers and in time. With benchmark data, PCN was found to always outperform its feedforward-only counterpart (a model without any mechanism for recurrent dynamics), and its performance tended to improve given more cycles of computation over time. In short, PCN reuses a single architecture to recursively run bottom-up and top-down processes, enabling an increasingly longer cascade of non-linear transformations. For image classification, PCN refines its representation over time towards more accurate and definitive recognition. [Paper 1] [Paper 2] [Video] [GitHub]
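The recursive update described above can be illustrated with a minimal sketch. It is not the published PCN implementation: it assumes a single dense linear layer instead of convolutional layers, ties the feedback weights to the transpose of the feedforward weights, and uses a hypothetical function name `pcn_layer_cycles` and a hand-picked step size. Under those assumptions, each cycle sends a top-down prediction, computes the prediction error, and uses the error to refine the higher-layer representation.

```python
import numpy as np

def pcn_layer_cycles(x, W, n_cycles, step):
    """Run recursive top-down/bottom-up cycles for one linear layer (illustrative only)."""
    r = W @ x                        # initial feedforward pass
    errors = []
    for _ in range(n_cycles):
        prediction = W.T @ r         # feedback: higher layer predicts the lower-layer input
        e = x - prediction           # prediction error at the lower layer
        errors.append(float(np.linalg.norm(e)))
        r = r + step * (W @ e)       # feedforward: the error updates the higher-layer state
    return r, errors

# Toy input and weights (assumed values, not from the original work).
rng = np.random.default_rng(0)
x = rng.standard_normal(32)
W = rng.standard_normal((16, 32)) / np.sqrt(32)
step = 1.0 / np.linalg.norm(W, 2) ** 2   # small enough that the error cannot grow
r, errors = pcn_layer_cycles(x, W, n_cycles=10, step=step)
print(errors[0], errors[-1])
```

With tied weights this update is a gradient step on the squared prediction error, which is why the error norm shrinks over cycles in this toy setting.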

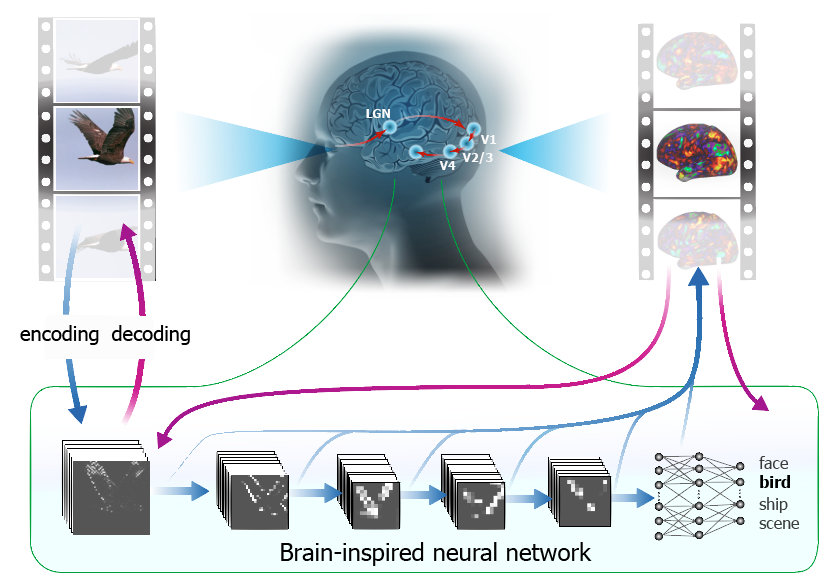

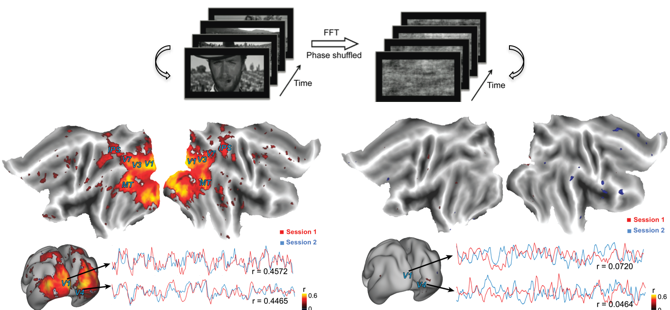

Neural Encoding and Decoding with Deep Learning for Dynamic Natural Vision

A convolutional neural network (CNN) driven by image recognition has been shown to be able to explain cortical responses to static pictures at ventral-stream areas. Here, we further showed that such a CNN could reliably predict and decode functional magnetic resonance imaging data from humans watching natural movies, despite its lack of any mechanism to account for temporal dynamics or feedback processing. Using separate data, encoding and decoding models were developed and evaluated for describing the bi-directional relationships between the CNN and the brain. Through the encoding models, the CNN-predicted areas covered not only the ventral stream, but also the dorsal stream, albeit to a lesser degree; single-voxel response was visualized as the specific pixel pattern that drove the response, revealing the distinct representation of individual cortical locations; cortical activation was synthesized from natural images with high throughput to map category representation, contrast, and selectivity. Through the decoding models, fMRI signals were directly decoded to estimate the feature representations in both visual and semantic spaces, for direct visual reconstruction and semantic categorization, respectively. These results corroborate, generalize, and extend previous findings, and highlight the value of using deep learning, as an all-in-one model of the visual cortex, to understand and decode natural vision. [Paper] [Video 1] [Video 2] [Video 3]

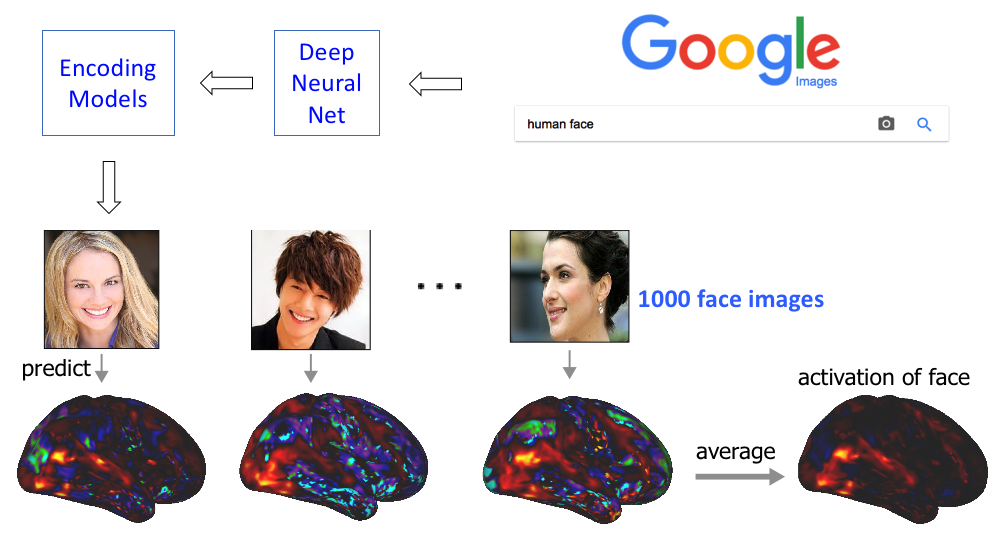

Deep neural network predicts cortical representation and organization of visual features

The brain represents visual objects with topographic cortical patterns. To address how distributed visual representations enable object categorization, we established predictive encoding models based on a deep residual neural network, and trained them to predict cortical responses to natural movies. Using this predictive model, we mapped human cortical representations to 64,000 visual objects from 80 categories with high throughput and accuracy. Such representations covered both the ventral and dorsal pathways, reflected multiple levels of object features, and preserved semantic relationships between categories. In the entire visual cortex, object representations were modularly organized into three categories: biological objects, non-biological objects, and background scenes. At a finer scale specific to each module, object representations revealed sub-modules for further categorization. These findings suggest that increasingly specific categories are represented by cortical patterns at progressively finer spatial scales. Such a nested hierarchy may be a fundamental principle for the brain to categorize visual objects with various levels of specificity, and can be explained and differentiated by object features at different levels. [Paper] [Slices]

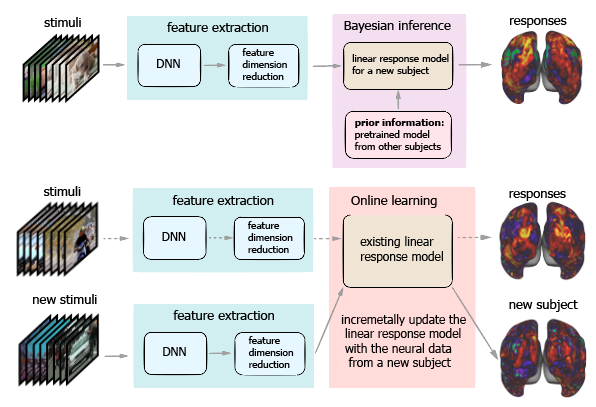

Transferring and Generalizing Deep-Learning-based Neural Encoding Models across Subjects

Recent studies have shown the value of using deep learning models for mapping and characterizing how the brain represents and organizes information for natural vision. However, modeling the relationship between deep learning models and the brain (i.e., building encoding models) requires measuring cortical responses to large and diverse sets of natural visual stimuli from single subjects. This requirement limits prior studies to few subjects, making it difficult to generalize findings across subjects or for a population. In this study, we developed new methods to transfer and generalize encoding models across subjects. To train encoding models specific to a subject, the models trained for other subjects were used as the prior models and were refined efficiently using Bayesian inference with a limited amount of data from the specific subject. To train encoding models for a population, the models were progressively trained and updated with incremental data from different subjects. As a proof of principle, we applied these methods to functional magnetic resonance imaging (fMRI) data from three subjects watching tens of hours of naturalistic videos, while a deep residual neural network driven by image recognition was used to model the visual cortical processing. Results demonstrate that the methods developed herein provide an efficient and effective strategy to establish subject-specific or population-wide predictive models of cortical representations of high-dimensional and hierarchical visual features. [Paper] [Video]
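One simple way to picture the subject-specific transfer is a linear encoding model with a Gaussian prior centered on weights learned from other subjects. The sketch below is an illustration under that assumption, not the method from the paper: the function name `map_weights`, the isotropic prior, the regularization strength `lam`, and the synthetic data are all hypothetical. The maximum a posteriori estimate then has the closed form `w = w_prior + (XᵀX + lam·I)⁻¹ Xᵀ (y − X w_prior)`.

```python
import numpy as np

def map_weights(X, y, w_prior, lam):
    """MAP estimate of linear encoding weights under a Gaussian prior centered on w_prior."""
    d = X.shape[1]
    A = X.T @ X + lam * np.eye(d)
    # Only the residual that the prior model fails to explain is fitted.
    return w_prior + np.linalg.solve(A, X.T @ (y - X @ w_prior))

# Synthetic example: few samples (n) relative to the number of features (d).
rng = np.random.default_rng(0)
d, n = 50, 20
w_true = rng.standard_normal(d)                   # weights of the new subject (unknown in practice)
w_prior = w_true + 0.1 * rng.standard_normal(d)   # weights from other subjects, assumed similar
X = rng.standard_normal((n, d))                   # stand-in for network features per time point
y = X @ w_true + 0.1 * rng.standard_normal(n)     # stand-in for one voxel's response

w_transfer = map_weights(X, y, w_prior, lam=1.0)      # prior from other subjects
w_scratch = map_weights(X, y, np.zeros(d), lam=1.0)   # ordinary ridge regression, no transfer
err_transfer = np.linalg.norm(w_transfer - w_true)
err_scratch = np.linalg.norm(w_scratch - w_true)
print(err_transfer, err_scratch)
```

When the prior weights are close to the new subject's weights, the prior-centered estimate needs far less data than fitting from scratch, which is the intuition behind reusing models across subjects.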

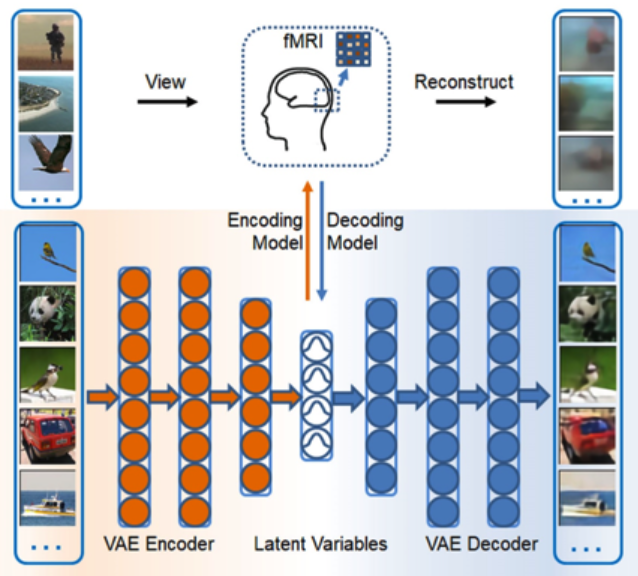

Variational auto-encoder: an unsupervised model for encoding and decoding fMRI activity

Goal-driven and feedforward-only convolutional neural networks (CNN) have been shown to be able to predict and decode cortical responses to natural images or videos. Here, we explored an alternative deep neural network, variational auto-encoder (VAE), as a computational model of the visual cortex. We trained a VAE with a five-layer encoder and a five-layer decoder to learn visual representations from a diverse set of unlabeled images. Inspired by the “free-energy” principle in neuroscience, we modeled the brain’s bottom-up and top-down pathways using the VAE’s encoder and decoder, respectively. Following such conceptual relationships, we used VAE to predict or decode cortical activity observed with functional magnetic resonance imaging (fMRI) from three human subjects passively watching natural videos. Compared to CNN, VAE resulted in relatively lower accuracies for predicting the fMRI responses to the video stimuli, especially for higher-order ventral visual areas. However, VAE offered a more convenient strategy for decoding the fMRI activity to reconstruct the video input, by first converting the fMRI activity to the VAE’s latent variables, and then converting the latent variables to the reconstructed video frames through the VAE’s decoder. This strategy was more advantageous than alternative decoding methods, e.g. partial least squares regression, by reconstructing both the spatial structure and color of the visual input. Findings from this study support the notion that the brain, at least in part, bears a generative model of the visual world. [Paper]

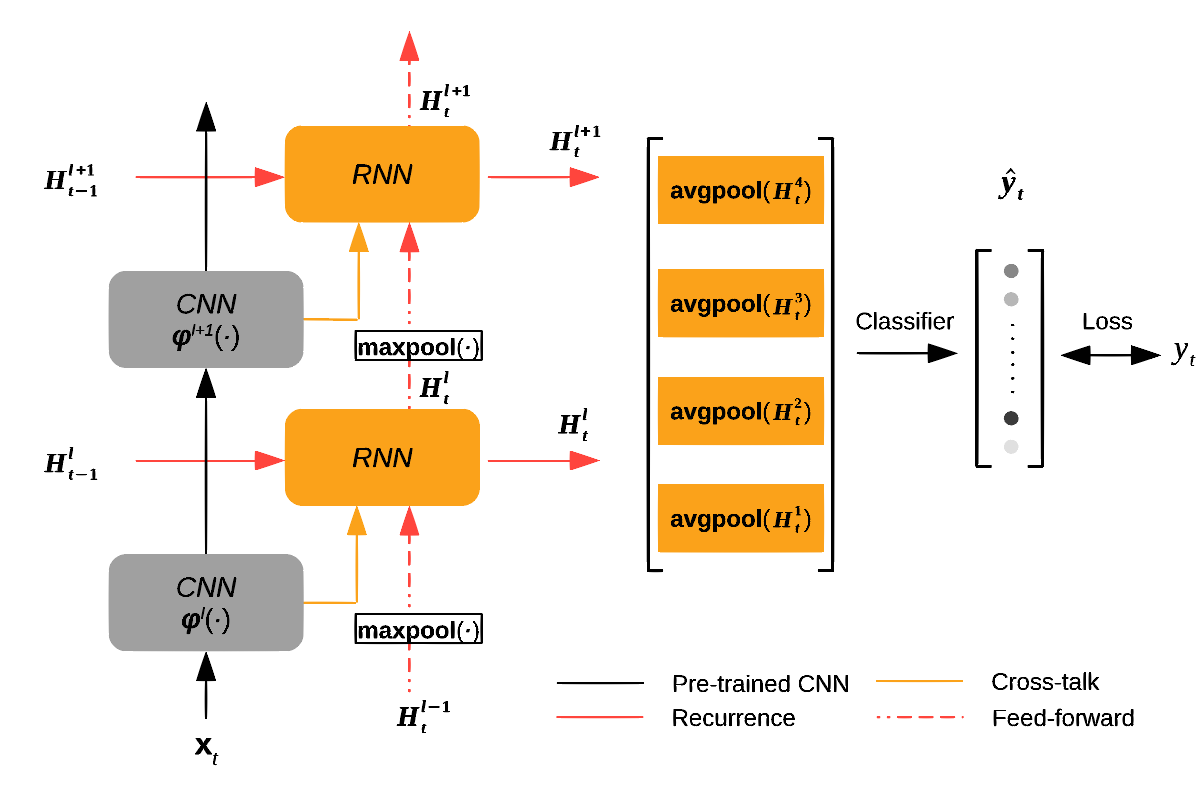

Deep recurrent neural net reveals a hierarchy of process memory

The human visual cortex extracts both spatial and temporal visual features to support perception and guide behavior. Deep convolutional neural networks (CNNs) provide a computational framework to model cortical representation and organization for spatial visual processing, but are unable to explain how the brain processes temporal information. To overcome this limitation, we extended a CNN by adding recurrent connections to different layers of the CNN to allow spatial representations to be remembered and accumulated over time. The extended model, or the recurrent neural network (RNN), embodied a hierarchical and distributed model of process memory as an integral part of visual processing. Unlike the CNN, the RNN learned spatiotemporal features from videos to enable action recognition. The RNN better predicted cortical responses to natural movie stimuli than the CNN at all visual areas, especially those along the dorsal stream. As a fully-observable model of visual processing, the RNN also revealed a cortical hierarchy of temporal receptive windows, dynamics of process memory, and spatiotemporal representations. These results support the hypothesis of process memory, and demonstrate the potential of using the RNN for in-depth computational understanding of dynamic natural vision. [Paper] [Video]

Spontaneous activity is organized by visual streams

Large-scale functional networks have been extensively studied using resting state functional magnetic resonance imaging. However, the pattern, organization, and function of fine-scale network activity remain largely unknown. In this project, we characterized the spontaneously emerging visual cortical activity by applying independent component analysis to resting state fMRI signals exclusively within the visual cortex. At this sub-system scale, we observed about 50 spatially independent components that were reproducible within and across subjects, and analyzed their spatial patterns and temporal relationships to reveal the intrinsic parcellation and organization of the visual cortex. The resulting visual cortical parcels were aligned with the steepest gradient of cortical myelination, and were organized into functional modules segregated along the dorsal/ventral pathways and foveal/peripheral early visual areas. Cortical distance could partly explain intra-hemispherical functional connectivity, but not inter-hemispherical connectivity; after discounting the effect of anatomical affinity, the fine-scale functional connectivity still preserved similar visual-stream-specific modular organization. Moreover, cortical retinotopy, folding, and cytoarchitecture impose limited constraints on the organization of resting state activity. Given these findings, we conclude that spontaneous activity patterns in the visual cortex are primarily organized by visual streams, likely reflecting feedback network interactions. [Paper]

Influences of high-level features, gaze, and scene transitions on cortical responses to natural movies

Complex, sustained, dynamic, and naturalistic visual stimulation can evoke distributed brain activities that are highly reproducible within and across individuals. However, the precise origins of such reproducible responses remain incompletely understood. Here, we employed concurrent functional magnetic resonance imaging (fMRI) and eye tracking to investigate the experimental and behavioral factors that influence fMRI activity and its intra- and inter-subject reproducibility during repeated movie stimuli. We found that widely distributed and highly reproducible fMRI responses were attributed primarily to the high-level natural content in the movie. In the absence of such natural content, low-level visual features alone in a spatiotemporally scrambled control stimulus evoked significantly reduced degree and extent of reproducible responses, which were mostly confined to the primary visual cortex (V1). We also found that the varying gaze behavior affected the cortical response at the peripheral part of V1 and at areas in the oculomotor network, with minor effects on the response reproducibility over the extrastriate visual areas. Lastly, scene transitions in the movie stimulus due to film editing partly caused the reproducible fMRI responses at widespread cortical areas, especially along the ventral visual pathway. Therefore, the naturalistic nature of a movie stimulus is necessary for driving highly reliable visual activations. In a movie-stimulation paradigm, scene transitions and individuals’ gaze behavior should be taken as potential confounding factors in order to properly interpret cortical activity that supports natural vision. [Paper]

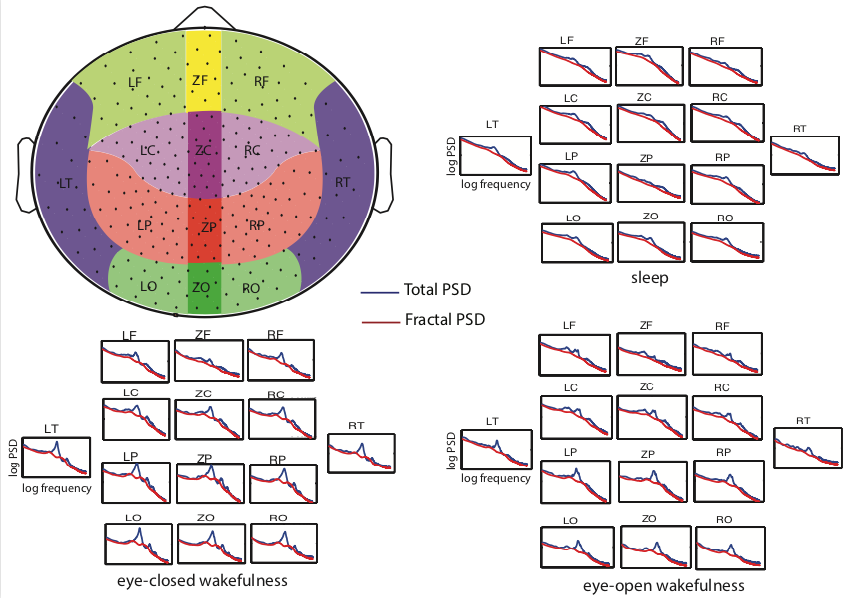

Separation of oscillatory and fractal dynamics in electrophysiological signals

Neurophysiological field-potential signals consist of both arrhythmic and rhythmic patterns indicative of the fractal and oscillatory dynamics arising from likely distinct mechanisms. We developed a new method, namely the Irregular-Resampling Auto-Spectral Analysis (IRASA), to separate fractal and oscillatory components in the power spectrum of neurophysiological signals according to their distinct temporal and spectral characteristics. In this method, we irregularly resampled the neural signal by a set of non-integer factors, and statistically summarized the auto-power spectra of the resampled signals to separate the fractal component from the oscillatory component in the frequency domain. We tested this method on simulated data and demonstrated that IRASA could robustly separate the fractal component from the oscillatory component. In addition, applications of IRASA to macaque electrocorticography (ECoG) and human magnetoencephalography (MEG) data revealed a greater power-law exponent of fractal dynamics during sleep compared to wakefulness. The temporal fluctuation in the broadband power of the fractal component revealed characteristic dynamics within and across the eyes-closed, eyes-open and sleep states. These results demonstrate the efficacy and potential applications of this method in analyzing electrophysiological signatures of large-scale neural circuit activity. This method has been included in FieldTrip, an open-source toolbox for electrophysiological data analysis used by thousands of users. [Paper] [Video]
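The resampling idea can be sketched in a few lines of NumPy. This is a simplified illustration, not the FieldTrip or published implementation: it uses a single windowed periodogram instead of averaged segments, linear interpolation without an anti-aliasing filter, and hypothetical names (`resample_linear`, `auto_power`, `irasa_sketch`) with an assumed set of resampling factors. For each factor `h`, the signal is resampled by `h` and `1/h`; the geometric mean of the two spectra keeps the power-law component in place while shifting oscillatory peaks, and the median across factors suppresses those shifted peaks.

```python
import numpy as np

def resample_linear(x, factor):
    """Resample x by a non-integer factor using linear interpolation."""
    n_out = int(np.floor((len(x) - 1) * factor)) + 1
    t_out = np.arange(n_out) / factor
    return np.interp(t_out, np.arange(len(x)), x)

def auto_power(x, nfft):
    """Hann-windowed periodogram, normalized by the window energy."""
    x = x - np.mean(x)
    win = np.hanning(len(x))
    return np.abs(np.fft.rfft(x * win, n=nfft)) ** 2 / np.sum(win ** 2)

def irasa_sketch(x, fs, factors=np.arange(1.1, 1.95, 0.05)):
    """Split a power spectrum into fractal and oscillatory parts (simplified)."""
    nfft = int(2 ** np.ceil(np.log2(len(x) * factors.max())))
    freqs = np.fft.rfftfreq(nfft, d=1.0 / fs)
    mixed = auto_power(x, nfft)
    pair_means = []
    for h in factors:
        up = auto_power(resample_linear(x, h), nfft)          # peaks move to f / h
        down = auto_power(resample_linear(x, 1.0 / h), nfft)  # peaks move to f * h
        pair_means.append(np.sqrt(up * down))                 # geometric mean of the pair
    fractal = np.median(pair_means, axis=0)                   # median removes shifted peaks
    return freqs, mixed, fractal, mixed - fractal

# Synthetic test signal: 1/f noise plus a 10 Hz oscillation (assumed parameters).
rng = np.random.default_rng(0)
fs, n = 200.0, 4000
f = np.fft.rfftfreq(n, d=1.0 / fs)
scale = np.ones_like(f)
scale[1:] = 1.0 / np.sqrt(f[1:])
noise = np.fft.irfft(np.fft.rfft(rng.standard_normal(n)) * scale, n=n)
x = noise + np.sin(2 * np.pi * 10.0 * np.arange(n) / fs)

freqs, mixed, fractal, oscillatory = irasa_sketch(x, fs)
band = (freqs >= 2.0) & (freqs <= 40.0)
peak_freq = freqs[band][np.argmax(oscillatory[band])]
slope = np.polyfit(np.log10(freqs[band]), np.log10(fractal[band]), 1)[0]
print(peak_freq, slope)
```

All resampled spectra are evaluated on the original frequency axis, which is what makes the oscillatory peaks move while the scale-free component stays put.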

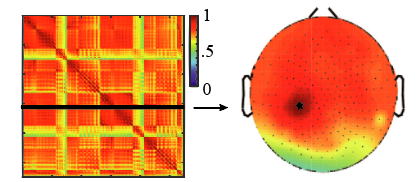

Scale-free electrophysiology contributes to global fMRI

Spontaneous activity observed with resting-state fMRI is used widely to uncover the brain’s intrinsic functional networks in health and disease. Although many networks appear modular and specific, global and nonspecific fMRI fluctuations also exist and both pose a challenge and present an opportunity for characterizing and understanding brain networks. Here, we used a multimodal approach to investigate the neural correlates of the global fMRI signal in the resting state. Like fMRI, resting-state power fluctuations of broadband and arrhythmic, or scale-free, macaque electrocorticography (ECoG) and human magnetoencephalography (MEG) activity were correlated globally. The power fluctuations of scale-free human EEG were coupled with the global component of simultaneously acquired resting-state fMRI, with the global hemodynamic change lagging the broadband spectral change of EEG by 5 s. The levels of global and nonspecific fluctuation and synchronization in scale-free population activity also varied across and depended on arousal states. Together, these results suggest that the neural origin of global resting-state fMRI activity is the broadband power fluctuation in scale-free population activity observable with macroscopic electrical or magnetic recordings. Moreover, the global fluctuation in neurophysiological and hemodynamic activity is likely modulated through diffuse neuromodulation pathways that govern arousal states and vigilance levels. [Paper]
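A lag between two time series, such as the one reported above, is commonly estimated by finding the shift that maximizes their cross-correlation. The sketch below illustrates that general technique only; it is not the analysis pipeline of the study. The function name `best_lag`, the 1 s sampling interval, and the synthetic signals are assumptions for the example.

```python
import numpy as np

def best_lag(x, y, max_lag):
    """Return the lag (in samples) at which y correlates best with x, plus all correlations.

    A positive lag means that y follows x.
    """
    lags = np.arange(-max_lag, max_lag + 1)
    r = []
    for k in lags:
        if k >= 0:
            a, b = x[:len(x) - k], y[k:]    # compare x[t] with y[t + k]
        else:
            a, b = x[-k:], y[:len(y) + k]   # compare x[t] with y[t - |k|]
        r.append(np.corrcoef(a, b)[0, 1])
    r = np.array(r)
    return int(lags[np.argmax(r)]), r

# Synthetic example: y is a noisy copy of x delayed by 5 samples.
rng = np.random.default_rng(0)
x = rng.standard_normal(300)                          # stand-in for a power fluctuation
y = np.concatenate([np.zeros(5), x[:-5]])             # delayed copy
y = y + 0.1 * rng.standard_normal(300)                # stand-in for a slower, noisier signal
lag, r = best_lag(x, y, max_lag=20)
print(lag)
```

With one sample per second, the returned lag in samples reads directly as a delay in seconds.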

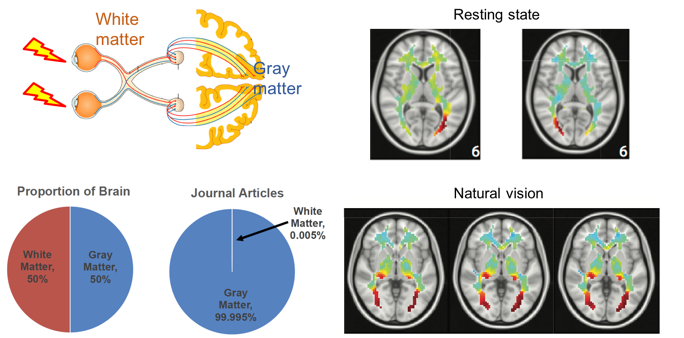

Imaging white-matter functional organization

Despite wide applications of functional magnetic resonance imaging (fMRI) to mapping brain activation and connectivity in cortical gray matter, it has rarely been utilized to study white-matter functions. In this project, we investigated the spatiotemporal characteristics of fMRI data within the white matter acquired from humans in the resting state or watching a naturalistic movie. By using independent component analysis and hierarchical clustering, resting-state fMRI data in the white matter were denoised and decomposed into spatially independent components, further assembled into hierarchically organized axonal fiber bundles. Interestingly, such components were partly reorganized during natural vision. Relative to the resting state, the visual task specifically induced a stronger degree of temporal coherence within the optic radiations, as well as significant correlations between the optic radiations and multiple cortical visual networks. Therefore, fMRI contains rich functional information about activity and connectivity within white matter at rest and during tasks, challenging the conventional practice of taking white-matter signals as noise or artifacts. [Paper]

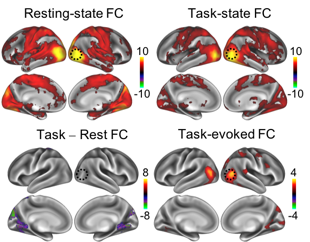

Task-evoked functional connectivity using fMRI

During complex tasks, patterns of functional connectivity differ from those in the resting state. However, what accounts for such differences remains unclear. Brain activity during a task reflects an unknown mixture of spontaneous and task-evoked activities. The difference in functional connectivity between a task state and the resting state may reflect not only task-evoked functional connectivity, but also changes in spontaneously emerging networks. Here, we characterized the differences in apparent functional connectivity between the resting state and when human subjects were watching a naturalistic movie. Such differences were marginally explained by the task-evoked functional connectivity involved in processing the movie content. Instead, they were mostly attributable to changes in spontaneous networks driven by ongoing activity during the task. The execution of the task reduced the correlations in ongoing activity among different cortical networks, especially between the visual and non-visual sensory or motor cortices. Our results suggest that task-evoked activity is not independent from spontaneous activity, and that engaging in a task may suppress spontaneous activity and its inter-regional correlation. [Paper]

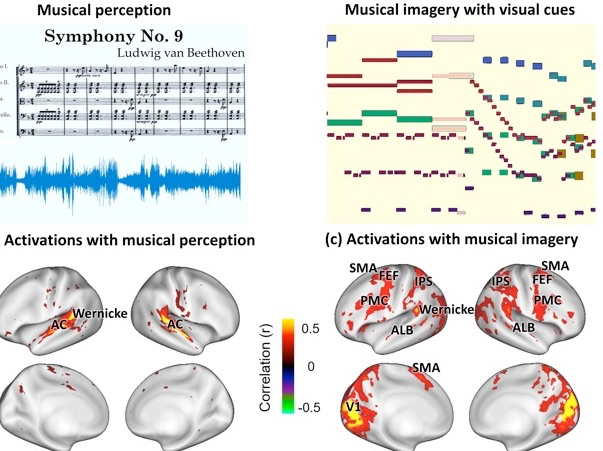

Musical Imagery Involves Wernicke’s area in Bilateral and Anti-Correlated Network Interactions

Musical imagery is the human experience of imagining music without actually hearing it. The neural basis of this mental ability is unclear, especially for musicians capable of engaging in accurate and vivid musical imagery. In this project, we created a visualization of an 8-minute symphony as a silent movie and used it as a real-time cue for musicians to continuously imagine the music for repeated and synchronized sessions during functional magnetic resonance imaging (fMRI). The activations and networks evoked by musical imagery were compared with those elicited by the subjects directly listening to the same music. Musical imagery and musical perception resulted in overlapping activations at the anterolateral belt and Wernicke’s area, where the responses were correlated with the auditory features of the music. Whereas Wernicke’s area interacted within the intrinsic auditory network during musical perception, it was involved in much more complex networks during musical imagery, showing positive correlations with the dorsal attention network and the motor-control network and negative correlations with the default-mode network. Our results highlight the important role of Wernicke’s area in forming vivid musical imagery through bilateral and anti-correlated network interactions, challenging the conventional view of segregated and lateralized processing of music versus language. [Paper]

* See other past projects in the Laboratory of Integrated Brain Imaging (LIBI) at Purdue University.